In the realm of machine learning (ML), random variables play a crucial role in shaping the performance and capabilities of various algorithms. Understanding their influence is essential for advancing ML technologies and developing innovative solutions. This article explores how random variables impact machine learning algorithms, focusing on current developments and innovations in the field.

Understanding Random Variables

A random variable is a fundamental concept in probability theory: a function that assigns a numerical value to each outcome of a random phenomenon. It can be discrete, taking countably many distinct values (for example, the result of a die roll), or continuous, taking any value in an interval (for example, measurement noise). In machine learning, random variables model uncertainty and variability in data, parameters, and training procedures, and they influence algorithm performance in several ways.
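To make the discrete/continuous distinction concrete, here is a minimal sketch using Python's standard `random` module. The die and the Gaussian noise are illustrative choices, not taken from the article. The point is that sample averages approach the expected values (3.5 for a fair die, 0 for zero-mean noise) as the number of draws grows.

```python
import random
import statistics


def sample_die(rng, n):
    """Discrete random variable: a fair six-sided die taking values 1..6."""
    return [rng.randint(1, 6) for _ in range(n)]


def sample_noise(rng, n, sigma=1.0):
    """Continuous random variable: Gaussian noise with mean 0 and std sigma."""
    return [rng.gauss(0.0, sigma) for _ in range(n)]


rng = random.Random(0)  # fixed seed so the run is reproducible
rolls = sample_die(rng, 10_000)
noise = sample_noise(rng, 10_000, sigma=0.5)

# Sample means approach the expected values: E[die] = 3.5, E[noise] = 0.
print(statistics.mean(rolls), statistics.mean(noise))
```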

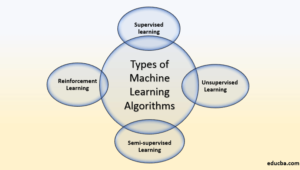

Role of Random Variables in Machine Learning

- Data Generation and Simulation

Random variables are central to generating synthetic datasets and running simulations, which are useful for training and testing machine learning models. By sampling inputs and noise from chosen probability distributions, ML practitioners can create diverse and representative datasets. This helps in training models that generalize well to unseen data.
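A minimal sketch of synthetic data generation, assuming a simple linear ground truth: inputs are drawn from a uniform distribution and targets receive additive Gaussian noise. The function name `make_linear_dataset` and its parameters are hypothetical, chosen only for illustration.

```python
import random


def make_linear_dataset(n, slope, intercept, noise_std, seed=0):
    """Hypothetical generator: (x, y) pairs with y = slope * x + intercept + eps,
    where x ~ Uniform(-1, 1) and eps ~ Normal(0, noise_std^2)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x = rng.uniform(-1.0, 1.0)
        eps = rng.gauss(0.0, noise_std)
        data.append((x, slope * x + intercept + eps))
    return data


data = make_linear_dataset(n=1000, slope=2.0, intercept=1.0, noise_std=0.1)
print(data[:3])
```

Changing `seed` yields a different dataset drawn from the same distribution, which is one way to check that a model's behavior does not hinge on one particular sample.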

- Feature Selection and Engineering

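In feature selection, randomness helps assess the importance of different features. Random Forests train each tree on a bootstrap sample and consider a random subset of features at each split, and permutation importance randomly shuffles one feature at a time to measure how much the model's error increases. Gradient Boosting can also subsample rows and features at each iteration (stochastic gradient boosting), which often improves accuracy and robustness. Feature engineering likewise benefits from probabilistic methods such as Principal Component Analysis (PCA), which treats features as random variables and finds the directions of greatest variance from their covariance matrix; randomized solvers that rely on random projections can speed up PCA on large datasets.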
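Permutation importance is one concrete way randomness is used to score features. The sketch below is model-agnostic and uses a hypothetical `toy_model` standing in for a trained Random Forest or Gradient Boosting model; the data are synthetic, with a target that depends on feature 0 only. scikit-learn ships a production version as `sklearn.inspection.permutation_importance`.

```python
import random


def mse(model, X, y):
    """Mean squared error of model predictions on rows X against targets y."""
    return sum((model(row) - t) ** 2 for row, t in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Importance of feature j = average increase in MSE after randomly
    shuffling column j, which breaks that feature's link to the target."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical toy setup: the target depends on feature 0 only.
data_rng = random.Random(42)
X = [[data_rng.gauss(0, 1), data_rng.gauss(0, 1)] for _ in range(500)]
y = [3.0 * x0 + data_rng.gauss(0, 0.1) for x0, _ in X]


def toy_model(row):
    # Stand-in for a trained model; it happens to use feature 0 only.
    return 3.0 * row[0]


print(permutation_importance(toy_model, X, y))
```

Because the toy model ignores feature 1, shuffling that column leaves the error unchanged and its importance is exactly 0, while shuffling feature 0 raises the error sharply.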

- Model Evaluation and Validation

Random variables are integral to model evaluation techniques such as cross-validation and the bootstrap. k-fold cross-validation randomly partitions the data into k folds, trains on k-1 of them, and validates on the remaining one, rotating through all folds so that the results depend less on any single data split. The bootstrap resamples the data with replacement to estimate the variability of a performance metric (for example, a confidence interval for accuracy), providing insight into how well the model is likely to generalize.
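The sketch below shows both ideas with the standard library only: `k_fold_indices` shuffles the example indices once and cuts them into k folds, and `bootstrap_ci` computes a percentile bootstrap confidence interval for a mean. Both function names are hypothetical, and `scores` is made-up per-example correctness (1 = correct, 0 = wrong) of some model on a held-out set.

```python
import random
import statistics


def k_fold_indices(n, k, seed=0):
    """Shuffle indices 0..n-1 once, cut them into k nearly equal folds,
    and yield (train, validation) index lists with each fold held out once."""
    rng = random.Random(seed)
    idx = list(range(n))
    rng.shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        val = folds[i]
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, val


def bootstrap_ci(values, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean: resample with replacement,
    recompute the mean each time, then read off the alpha/2 quantiles."""
    rng = random.Random(seed)
    means = sorted(
        statistics.mean(rng.choices(values, k=len(values)))
        for _ in range(n_resamples)
    )
    lo = means[int(alpha / 2 * n_resamples)]
    hi = means[int((1 - alpha / 2) * n_resamples) - 1]
    return lo, hi


for train, val in k_fold_indices(10, 3):
    print(len(train), len(val))

scores = [1] * 80 + [0] * 20  # hypothetical: 80 of 100 predictions correct
print(bootstrap_ci(scores))
```

With 80 of 100 examples correct, the interval should land roughly around 0.72 to 0.88; the exact bounds depend on the seed and the number of resamples.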

- Regularization Techniques

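Regularization methods such as dropout and noise injection introduce randomness to reduce overfitting. Dropout randomly sets each unit's activation to zero with some probability p during training, so the network cannot rely on any single unit and is pushed to learn more robust features; dropout is disabled at inference time. Noise injection adds random noise, often Gaussian, to the inputs during training, making the model less sensitive to small perturbations and improving its performance on real-world data.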
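A minimal sketch of both regularizers on plain Python lists, assuming the common "inverted dropout" convention, in which surviving activations are rescaled by 1/(1 - p) during training so that nothing needs to change at inference. The function names are hypothetical; deep learning frameworks provide their own dropout layers. The sketch requires p < 1.

```python
import random


def dropout(activations, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p during training and
    scale survivors by 1/(1-p) so the expected activation is unchanged.
    At inference time the input passes through untouched."""
    if not training or p == 0.0:
        return list(activations)
    keep = 1.0 - p
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def add_input_noise(x, sigma, rng):
    """Noise injection: perturb each input feature with Gaussian noise N(0, sigma^2)."""
    return [v + rng.gauss(0.0, sigma) for v in x]


rng = random.Random(0)
h = [1.0] * 10_000
dropped = dropout(h, p=0.5, rng=rng)
# About half the units are zeroed, survivors become 2.0, and the mean stays near 1.0.
print(sum(dropped) / len(dropped))
print(add_input_noise([0.0, 1.0, 2.0], sigma=0.1, rng=rng))
```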

Current Developments and Innovations

- Stochastic Gradient Descent (SGD)

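Stochastic Gradient Descent (SGD), an optimization algorithm, incorporates random variables by estimating the gradient from a randomly chosen example or subset of the data at each step instead of the full dataset. Each update is therefore much cheaper than a full-batch gradient descent step, which makes training on large datasets practical, and the resulting gradient noise can help the optimizer move past poor regions of the loss surface. Widely used variants include mini-batch SGD, which averages gradients over small random batches, and Adaptive Moment Estimation (Adam), which adapts a per-parameter step size using running estimates of the gradient's first and second moments.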
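The sketch below fits a one-variable linear model with plain mini-batch SGD (not Adam), assuming squared-error loss and a synthetic dataset with true slope 2 and intercept 1. Reshuffling at every epoch makes each batch a random subset, so each batch gradient is a noisy but unbiased estimate of the full-data gradient. The function name, learning rate, batch size, and epoch count are illustrative assumptions, not tuned values.

```python
import random


def minibatch_sgd(data, lr=0.1, batch_size=32, epochs=50, seed=0):
    """Fit y ~ w * x + b by mini-batch SGD on mean squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    data = list(data)  # copy so the caller's list is not reordered
    for _ in range(epochs):
        rng.shuffle(data)  # new random batches every epoch
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of (1/m) * sum (w*x + b - y)^2 with respect to w and b.
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * gw
            b -= lr * gb
    return w, b


# Synthetic data: y = 2x + 1 + Gaussian noise.
data_rng = random.Random(1)
data = []
for _ in range(1000):
    x = data_rng.uniform(-1.0, 1.0)
    data.append((x, 2.0 * x + 1.0 + data_rng.gauss(0.0, 0.1)))

w, b = minibatch_sgd(data)
print(w, b)  # close to the true values 2.0 and 1.0
```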

- Bayesian Machine Learning

Bayesian methods treat unknown quantities such as model parameters as random variables, which lets them carry uncertainty into model predictions. Bayesian inference updates a prior distribution into a posterior distribution based on observed data, yielding probabilistic predictions rather than single point estimates. Approximate inference methods, such as Variational Inference and Markov Chain Monte Carlo (MCMC), have expanded the applicability of Bayesian machine learning to complex models and large datasets.
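Full Variational Inference or MCMC does not fit in a few lines, but the core idea of updating a prior into a posterior can be shown with a conjugate model in which the posterior has a closed form. The sketch assumes a Beta prior on an unknown success probability and Bernoulli observations; the observations are made up for illustration. When no closed form exists, VI or MCMC approximate the same kind of posterior.

```python
import random


def beta_bernoulli_update(alpha, beta, observations):
    """Conjugate update for an unknown success probability theta.
    Prior theta ~ Beta(alpha, beta); each observation is 0 or 1 (Bernoulli(theta)).
    Posterior: Beta(alpha + #successes, beta + #failures)."""
    successes = sum(observations)
    failures = len(observations) - successes
    return alpha + successes, beta + failures


def beta_mean_var(alpha, beta):
    """Mean and variance of a Beta(alpha, beta) distribution."""
    total = alpha + beta
    return alpha / total, alpha * beta / (total ** 2 * (total + 1))


# Hypothetical data: 7 successes in 10 trials, with a uniform Beta(1, 1) prior.
obs = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1]
post = beta_bernoulli_update(1, 1, obs)
print(post, beta_mean_var(*post))

# Draw theta from the posterior; these draws express the remaining uncertainty.
rng = random.Random(0)
draws = [rng.betavariate(*post) for _ in range(20_000)]
print(sum(draws) / len(draws))
```

With a uniform prior and 7 successes in 10 trials, the posterior is Beta(8, 4), whose mean is 8/12 ≈ 0.667. In models without a closed-form posterior, MCMC produces draws like these approximately.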

- Generative Adversarial Networks (GANs)

Generative Adversarial Networks use random variables to generate realistic data samples. A GAN consists of two neural networks, a generator and a discriminator, that compete in a game-theoretic framework. The generator transforms random noise (a latent vector sampled from a simple distribution such as a standard Gaussian) into data samples, while the discriminator estimates the probability that a given sample is real rather than generated. Recent advancements in GANs, including Conditional GANs and StyleGAN, have led to impressive results in image generation and other creative applications.
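The competition between the two networks is usually written as a minimax game. In the original GAN formulation, with real data drawn from p_data and latent noise z drawn from a simple distribution p_z, the discriminator D maximizes and the generator G minimizes the value function:

```latex
\min_{G}\,\max_{D}\; V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
```

Here z is the random variable that the generator maps to a sample G(z). In practice the generator is often trained to maximize log D(G(z)) instead, which gives stronger gradients early in training when the discriminator easily rejects generated samples.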

- Reinforcement Learning

Reinforcement Learning (RL) algorithms use random variables to model uncertainty in decision-making: state transitions and rewards are often stochastic, and many policies select actions by sampling. RL agents learn policies by balancing exploration (trying actions to gather information) and exploitation (choosing the actions currently believed to be best), with randomness driving exploration, as in epsilon-greedy action selection. Methods such as Deep Q-Learning and Proximal Policy Optimization (PPO) have demonstrated significant improvements in complex environments and applications.
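A multi-armed bandit is the simplest setting in which to see exploration driven by randomness. The sketch below uses epsilon-greedy action selection, the exploration scheme commonly paired with Deep Q-Learning, but replaces the neural network with a table of running reward averages. The function name, arm means, epsilon, and step count are illustrative assumptions.

```python
import random


def epsilon_greedy_bandit(true_means, epsilon=0.1, steps=5000, seed=0):
    """Epsilon-greedy exploration on a multi-armed bandit.
    With probability epsilon pick a random arm (explore); otherwise pick the arm
    with the highest estimated reward (exploit). Rewards are random variables:
    arm i pays Normal(true_means[i], 1)."""
    rng = random.Random(seed)
    n_arms = len(true_means)
    counts = [0] * n_arms
    estimates = [0.0] * n_arms
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)
        else:
            arm = max(range(n_arms), key=lambda i: estimates[i])
        reward = rng.gauss(true_means[arm], 1.0)
        counts[arm] += 1
        # Incremental sample-mean update of this arm's value estimate.
        estimates[arm] += (reward - estimates[arm]) / counts[arm]
    return estimates, counts


estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 1.0])
print(estimates, counts)
```

Spending 10% of steps on random exploration still samples every arm often enough to identify the best one (mean 1.0), and the agent spends most of the remaining steps exploiting it.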

Challenges and Future Directions

Despite the advancements, challenges remain in effectively leveraging random variables in machine learning. Ensuring reproducibility in randomized experiments, managing computational complexity, and addressing overfitting are ongoing areas of research. Future developments may focus on optimizing random variable-based techniques, integrating them with emerging technologies like quantum computing, and enhancing their applicability to diverse domains.
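One common reproducibility practice is to route all randomness through explicitly seeded generators rather than hidden global state. In this sketch, `run_experiment` is a hypothetical stand-in for a training run: it shuffles a data split and draws initial weights from a single `random.Random(seed)`, so the same seed reproduces the same run. Real pipelines typically also need to seed NumPy and the deep learning framework, and some GPU kernels can remain nondeterministic even then.

```python
import random


def run_experiment(seed):
    """Hypothetical randomized experiment: shuffle a data split and draw
    random initial weights, with all randomness from one seeded generator."""
    rng = random.Random(seed)
    indices = list(range(10))
    rng.shuffle(indices)
    train_split = indices[:7]
    weights = [rng.gauss(0.0, 0.01) for _ in range(3)]
    return train_split, weights


# The same seed reproduces the run exactly; a different seed does not.
print(run_experiment(123) == run_experiment(123))
print(run_experiment(123) == run_experiment(124))
```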

Conclusion

Random variables are integral to the functioning and advancement of machine learning algorithms. Their influence spans data generation, feature selection, model evaluation, and regularization. Current developments and innovations, such as Stochastic Gradient Descent, Bayesian methods, Generative Adversarial Networks, and Reinforcement Learning, highlight the transformative impact of random variables in ML. As research continues, exploring new ways to harness randomness will drive further progress and innovation in the field.