Introduction

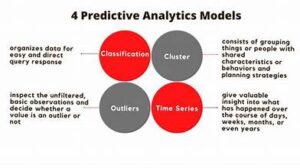

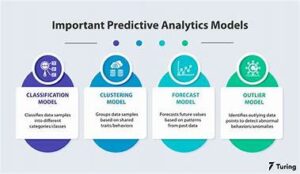

Regression analysis is a core technique in predictive modeling for understanding relationships between variables and making forecasts. Regression coefficients are central to it: they determine how each predictor contributes to a model's predictions. This article covers what regression coefficients are, how to interpret them, and how they are applied in various fields.

What Are Regression Coefficients?

Regression coefficients are numerical values that represent the relationship between each predictor variable and the response variable in a regression model. In a linear regression model fitted by ordinary least squares, the coefficients are chosen to minimize the sum of squared differences between the predicted and actual values of the response variable.
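
For reference, the least squares criterion can be written as below. The notation is introduced here for illustration and is not taken from the article: $y_i$ is the response for observation $i$, $x_{ij}$ is the value of predictor $j$, $\beta_0$ is the intercept, and $\beta_1, \dots, \beta_p$ are the coefficients.

```latex
\hat{\beta} = \arg\min_{\beta_0, \dots, \beta_p}
  \sum_{i=1}^{n} \left( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \right)^2
```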

The Role of Regression Coefficients

- Quantifying Relationships

Regression coefficients quantify the direction and size of the relationship between predictor variables and the outcome. For example, in a simple linear regression model with one predictor, the coefficient is the expected change in the response variable for a one-unit increase in the predictor variable.
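
The one-unit interpretation can be checked with a minimal sketch. The data below (advertising spend and sales) is made up purely for illustration, and `fit_simple_regression` is a hypothetical helper that applies the usual closed-form least squares formulas for a single predictor.

```python
def fit_simple_regression(x, y):
    """Return (intercept, slope) of the least-squares line y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope = sum of cross-deviations divided by sum of squared x-deviations
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Made-up illustrative data, not from any real study
ad_spend = [1.0, 2.0, 3.0, 4.0, 5.0]
sales = [3.1, 4.9, 7.2, 8.8, 11.0]

intercept, slope = fit_simple_regression(ad_spend, sales)

# Predictions one unit apart differ by exactly the slope
pred_at_2 = intercept + slope * 2.0
pred_at_3 = intercept + slope * 3.0
print(f"slope = {slope:.3f}, change per unit = {pred_at_3 - pred_at_2:.3f}")
```

Whatever pair of adjacent predictor values is chosen, the difference between the two predictions equals the slope, which is what "change per one-unit increase" means.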

- Predictive Power

These coefficients are essential for making predictions: a prediction is the intercept plus the sum of each coefficient multiplied by its predictor's value. In a multiple regression model, each coefficient estimates the effect of its respective predictor variable while holding the other predictors constant, which makes it possible to separate the impact of individual factors.
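
The "holding other predictors constant" reading can be illustrated with a short sketch. It assumes NumPy is available and uses synthetic data with known coefficients (2.0 and -3.0, chosen arbitrarily); none of the names or values come from the article.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 200

# Synthetic predictors; x2 is deliberately correlated with x1
x1 = rng.normal(size=n)
x2 = 0.5 * x1 + rng.normal(size=n)
y = 1.0 + 2.0 * x1 - 3.0 * x2 + rng.normal(scale=0.1, size=n)

# Design matrix with a leading column of ones for the intercept
X = np.column_stack([np.ones(n), x1, x2])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

# Raise x1 by one unit while keeping x2 fixed at the same value
base = np.array([1.0, 0.3, -0.7])              # [intercept term, x1, x2]
bumped = base + np.array([0.0, 1.0, 0.0])
change = bumped @ coef - base @ coef

print("estimated coefficients:", np.round(coef, 3))
print("change in prediction:", round(float(change), 3))
```

The change in the prediction equals the coefficient on `x1`, even though `x1` and `x2` are correlated in the data, because the second predictor is held at the same value in both predictions.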

- Model Interpretation

By examining regression coefficients, analysts can interpret the influence of individual variables. A positive coefficient indicates that the response variable tends to increase as the predictor increases, while a negative coefficient indicates that it tends to decrease. This interpretation describes an association under the model's assumptions, and it is crucial for understanding underlying patterns and making informed decisions.

Types of Regression Coefficients

- Standard Coefficients

Standard coefficients are the unregularized coefficients of an ordinary linear regression model. They are derived from the least squares method and estimate the effect of each predictor on the response variable, expressed in units of the response per unit of the predictor.

- Adjusted Coefficients

In multiple regression models, adjusted coefficients account for the presence of other variables. Each one measures the unique contribution of its predictor after the influence of the other predictors in the model has been accounted for.

- Regularized Coefficients

Regularization techniques, such as Lasso and Ridge regression, add a penalty on coefficient size to the fitting objective in order to reduce overfitting. Lasso regression (an L1 penalty) can shrink some coefficients exactly to zero, effectively performing feature selection, while Ridge regression (an L2 penalty) shrinks coefficients toward zero without generally setting them to exactly zero, which stabilizes the model.
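
The difference between the two penalties can be seen in a small NumPy sketch. This is an illustrative implementation under stated assumptions, not a production solver: `ridge_fit` uses the closed-form ridge solution, `lasso_fit` uses plain coordinate descent with soft-thresholding, the data is synthetic, and the penalty strengths (`lam`) are arbitrary.

```python
import numpy as np


def soft_threshold(value, threshold):
    """Shrink value toward zero by threshold, returning 0 if it would cross zero."""
    return np.sign(value) * max(abs(value) - threshold, 0.0)


def ridge_fit(X, y, lam):
    """Closed-form ridge: minimizes ||y - Xb||^2 + lam * ||b||^2."""
    p = X.shape[1]
    return np.linalg.solve(X.T @ X + lam * np.eye(p), X.T @ y)


def lasso_fit(X, y, lam, n_iter=200):
    """Coordinate descent for (1 / (2n)) * ||y - Xb||^2 + lam * ||b||_1."""
    n, p = X.shape
    b = np.zeros(p)
    for _ in range(n_iter):
        for j in range(p):
            # Residual with feature j's current contribution added back
            partial_residual = y - X @ b + X[:, j] * b[j]
            rho = X[:, j] @ partial_residual / n
            z = X[:, j] @ X[:, j] / n
            b[j] = soft_threshold(rho, lam) / z
    return b


# Synthetic data: only the first two of four predictors matter
rng = np.random.default_rng(1)
n = 200
X = rng.normal(size=(n, 4))
X = (X - X.mean(axis=0)) / X.std(axis=0)   # standardize so penalties are comparable
true_coef = np.array([3.0, -2.0, 0.0, 0.0])
y = X @ true_coef + rng.normal(scale=0.5, size=n)
y = y - y.mean()                            # center y so no intercept is needed

ols = np.linalg.lstsq(X, y, rcond=None)[0]
ridge = ridge_fit(X, y, lam=50.0)
lasso = lasso_fit(X, y, lam=0.2)

print("OLS:  ", np.round(ols, 3))
print("Ridge:", np.round(ridge, 3))
print("Lasso:", np.round(lasso, 3))
```

In this setup the ridge coefficients are all smaller in overall magnitude than the least squares ones but remain nonzero, while the lasso sets the coefficients of the two irrelevant predictors to exactly zero. In practice a library implementation and a tuned penalty strength would normally be used instead of hand-written solvers.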

Applications of Regression Coefficients

- Business Analytics

In business, regression coefficients help in understanding factors influencing sales, customer behavior, and operational efficiency. For instance, coefficients can reveal how marketing spend or customer demographics impact revenue, guiding strategic decisions.

- Healthcare

In healthcare, regression coefficients are used to identify risk factors for diseases and predict patient outcomes. For example, coefficients in a logistic regression model describe how various lifestyle factors change the log-odds of developing a condition; exponentiating a coefficient gives the corresponding odds ratio.
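
As a minimal sketch of how a logistic regression coefficient is read, the example below converts a coefficient to an odds ratio and to predicted probabilities. The intercept and coefficient are hypothetical values chosen for illustration, not estimates from any real study.

```python
import math


def odds_ratio(coefficient):
    """Odds ratio for a one-unit increase in the predictor."""
    return math.exp(coefficient)


def predicted_probability(intercept, coefficient, x):
    """Logistic model: probability = 1 / (1 + exp(-(intercept + coefficient * x)))."""
    log_odds = intercept + coefficient * x
    return 1.0 / (1.0 + math.exp(-log_odds))


# Hypothetical fitted values (assumptions for illustration only)
intercept = -2.0
smoking_coef = 0.7   # change in log-odds for smokers (x = 1) vs. non-smokers (x = 0)

p_nonsmoker = predicted_probability(intercept, smoking_coef, 0)
p_smoker = predicted_probability(intercept, smoking_coef, 1)

print(f"odds ratio: {odds_ratio(smoking_coef):.3f}")
print(f"P(condition | non-smoker) = {p_nonsmoker:.3f}")
print(f"P(condition | smoker)     = {p_smoker:.3f}")
```

The coefficient acts multiplicatively on the odds, not additively on the probability, so the change in probability depends on the baseline risk.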

- Finance

Financial analysts use regression coefficients to model stock prices, interest rates, and economic indicators. By understanding how different variables influence financial metrics, they can make better investment decisions and manage risks effectively.

- Social Sciences

In social sciences, regression coefficients assist in studying relationships between social variables, such as education level and income. This helps researchers understand societal trends and formulate policies.

Best Practices for Using Regression Coefficients

- Ensure Data Quality

Accurate regression coefficients rely on high-quality data. Ensure data is clean, relevant, and appropriately preprocessed to avoid misleading results.

- Consider Multicollinearity

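When predictor variables are highly correlated, the coefficient estimates become unstable: their standard errors inflate, and their values can change substantially with small changes in the data. Use diagnostics like the Variance Inflation Factor (VIF) to detect multicollinearity, and address it by, for example, removing or combining redundant predictors or applying regularization.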
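
A VIF check can be sketched directly from its definition: regress each predictor on the others and compute 1 / (1 - R²). The function name `variance_inflation_factors` and the synthetic data are assumptions for illustration; NumPy is assumed to be available.

```python
import numpy as np


def variance_inflation_factors(X):
    """Return VIF_j = 1 / (1 - R_j^2) for each column j of X.

    R_j^2 comes from regressing column j on all other columns plus an intercept.
    """
    n, p = X.shape
    vifs = []
    for j in range(p):
        target = X[:, j]
        others = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        coef, *_ = np.linalg.lstsq(others, target, rcond=None)
        residuals = target - others @ coef
        ss_res = residuals @ residuals
        ss_tot = ((target - target.mean()) ** 2).sum()
        r_squared = 1.0 - ss_res / ss_tot
        vifs.append(1.0 / (1.0 - r_squared))
    return np.array(vifs)


# Synthetic predictors: x2 is nearly a copy of x1, x3 is independent
rng = np.random.default_rng(2)
n = 300
x1 = rng.normal(size=n)
x2 = x1 + 0.1 * rng.normal(size=n)
x3 = rng.normal(size=n)

vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print("VIFs:", np.round(vifs, 2))
```

Here the two nearly duplicate predictors receive very large VIFs while the independent one stays close to 1. Thresholds such as 5 or 10 are commonly cited rules of thumb rather than strict cutoffs.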

- Validate Models

Regularly validate your models using techniques like cross-validation to ensure that the regression coefficients are reliable and the model generalizes well to new data.
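
A minimal k-fold cross-validation sketch for a least squares model is shown below. It assumes NumPy, uses synthetic data, and the helper name `k_fold_mse` is hypothetical; a library routine would typically be used in practice.

```python
import numpy as np


def k_fold_mse(X, y, k=5, seed=0):
    """Return the held-out mean squared error of an OLS fit for each of k folds."""
    rng = np.random.default_rng(seed)
    indices = rng.permutation(len(y))
    folds = np.array_split(indices, k)
    scores = []
    for i in range(k):
        test_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        # Fit coefficients on the training folds only
        coef, *_ = np.linalg.lstsq(X[train_idx], y[train_idx], rcond=None)
        # Evaluate on the fold that was held out
        errors = y[test_idx] - X[test_idx] @ coef
        scores.append(np.mean(errors ** 2))
    return np.array(scores)


# Synthetic data: y = 1 + 2x + noise
rng = np.random.default_rng(3)
n = 100
x = rng.normal(size=n)
y = 1.0 + 2.0 * x + rng.normal(scale=0.5, size=n)
X = np.column_stack([np.ones(n), x])

scores = k_fold_mse(X, y, k=5)
print("per-fold MSE:", np.round(scores, 3))
print("mean MSE:", round(float(scores.mean()), 3))
```

If the held-out error is much larger than the training error, or varies widely across folds, the coefficients are unlikely to generalize well.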

- Interpret in Context

Always interpret regression coefficients in the context of the model and the data. A coefficient's magnitude depends on the units of its predictor, so consider the scale of the predictor variables and the practical significance of the coefficients, not just their size.
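
The effect of predictor scale can be shown with a short sketch. The height and weight values are made up for illustration, NumPy is assumed, and `ols_slope` is a hypothetical helper for the single-predictor least squares slope.

```python
import numpy as np


def ols_slope(x, y):
    """Least-squares slope for one predictor: cov(x, y) / var(x)."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return np.cov(x, y, ddof=1)[0, 1] / np.var(x, ddof=1)


# Made-up illustrative data
height_m = np.array([1.55, 1.62, 1.70, 1.75, 1.83, 1.90])
weight_kg = np.array([52.0, 58.0, 66.0, 70.0, 80.0, 88.0])

# Same relationship, three different predictor scales
slope_per_m = ols_slope(height_m, weight_kg)
slope_per_cm = ols_slope(height_m * 100.0, weight_kg)
height_z = (height_m - height_m.mean()) / height_m.std(ddof=1)
slope_per_sd = ols_slope(height_z, weight_kg)

print(f"kg per metre:              {slope_per_m:.2f}")
print(f"kg per centimetre:         {slope_per_cm:.4f}")
print(f"kg per standard deviation: {slope_per_sd:.2f}")
```

The three slopes describe the same fitted line. Only the unit of "one unit" changes, which is why coefficients from predictors on different scales should not be compared by raw size.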

Conclusion

Regression coefficients are fundamental to predictive modeling, offering insights into the relationships between variables and enhancing decision-making across various fields. By understanding their role and applying best practices, analysts and researchers can leverage these coefficients to build robust models and gain valuable insights.