In recent years, Bayes’ Theorem has become an increasingly important tool for predictive modeling in Artificial Intelligence (AI). Rooted in probability theory, it offers a principled framework for reasoning about uncertain events and revising predictions as evidence accumulates. Its influence is growing as AI systems become more sophisticated and are deployed in settings where quantifying uncertainty matters. This article explores how Bayes’ Theorem is shaping predictive modeling and highlights recent trends and innovations in this area.

Understanding Bayes’ Theorem

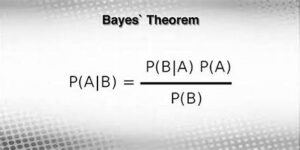

Bayes’ Theorem provides a way to update the probability of a hypothesis as more evidence or information becomes available. The theorem is expressed as:

$$P(A \mid B) = \frac{P(B \mid A) \times P(A)}{P(B)}$$

Where:

- $P(A \mid B)$ is the posterior probability, or the probability of event A given that event B has occurred.

- $P(B \mid A)$ is the likelihood, or the probability of observing B given that A is true.

- $P(A)$ is the prior probability of A.

- $P(B)$ is the marginal likelihood, or the probability of observing B.

Because the posterior computed from one piece of evidence can serve as the prior for the next, the formula supports updating predictions incrementally as new data arrive. This makes it especially valuable in AI applications where data and circumstances change constantly.
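As a minimal worked sketch, assuming a toy spam filter with made-up probabilities (the helper name `posterior` and every number below are hypothetical), the formula can be applied once and then repeatedly, with each posterior becoming the prior for the next piece of evidence:

```python
def posterior(prior: float, p_e_given_h: float, p_e_given_not_h: float) -> float:
    """Hypothetical helper: return P(H | E) for a binary hypothesis H via Bayes' Theorem."""
    # Marginal likelihood P(E) by the law of total probability.
    evidence = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / evidence


# Hypothetical numbers: 20% of mail is spam; "free" appears in 60% of spam and
# 5% of legitimate mail; "winner" appears in 30% of spam and 1% of legitimate mail.
p_spam = 0.2
after_free = posterior(p_spam, 0.6, 0.05)       # one update
after_both = posterior(after_free, 0.3, 0.01)   # the posterior becomes the new prior

print(f"P(spam | free)         = {after_free:.4f}")
print(f"P(spam | free, winner) = {after_both:.4f}")
```

Updating on the two words one at a time gives the same answer as conditioning on both at once, provided the words are assumed conditionally independent given the class (a simplifying assumption of this sketch).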

Applications in Predictive Modeling

1. Enhanced Accuracy in Forecasting

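Bayes’ Theorem can improve forecasting by combining prior knowledge with new data and by quantifying the uncertainty of each forecast. In financial markets, for example, Bayesian models can integrate historical data (encoded in the prior) with real-time market information (through the likelihood), producing forecasts that are revised as conditions change and that carry explicit uncertainty estimates rather than a single point value. This can lead to more accurate and timely forecasts than static models, particularly when market conditions shift.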
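The prior-plus-new-data idea can be sketched with the simplest conjugate model, assuming the quantity being forecast (for example, a mean daily return) is Gaussian and the observation noise variance is known. The helper `update_normal` and all numbers are hypothetical; real market models are far more involved:

```python
def update_normal(prior_mean: float, prior_var: float, obs: float, noise_var: float):
    """Hypothetical helper: conjugate Gaussian update of an unknown mean after one observation."""
    post_precision = 1.0 / prior_var + 1.0 / noise_var
    post_var = 1.0 / post_precision
    # Precision-weighted average of the prior belief and the new observation.
    post_mean = post_var * (prior_mean / prior_var + obs / noise_var)
    return post_mean, post_var


# Hypothetical prior from historical data (arbitrary units), then three new observations.
mean, var = 0.05, 0.01
for obs in [0.30, -0.10, 0.20]:
    mean, var = update_normal(mean, var, obs, noise_var=0.04)
    print(f"forecast mean={mean:.4f}, variance={var:.5f}")
```

The posterior variance shrinks with every observation, so the model reports how confident the forecast is, not just a point value.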

2. Personalized Recommendations

In e-commerce and digital media, Bayes’ Theorem can enhance recommendation systems by modeling user behavior and preferences probabilistically. By updating the estimated probability of a user’s interest in each item after every new interaction, AI systems can provide more personalized and relevant product or content recommendations, increasing user engagement and satisfaction.
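A minimal sketch, assuming each user-item engagement is modeled as a coin flip with a Beta prior (a Beta-Bernoulli model). The class `ItemBelief`, the item names, and the interaction log are hypothetical, and production recommenders typically use richer models:

```python
from dataclasses import dataclass


@dataclass
class ItemBelief:
    """Hypothetical Beta(alpha, beta) belief that a user engages with one item."""
    alpha: float = 1.0  # prior pseudo-count of engagements (uniform Beta(1, 1) prior)
    beta: float = 1.0   # prior pseudo-count of non-engagements

    def update(self, engaged: bool) -> None:
        # Beta-Bernoulli conjugacy: each interaction adds one to the matching count.
        if engaged:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


beliefs = {name: ItemBelief() for name in ["A", "B", "C"]}
# Hypothetical interaction log for one user: (item, engaged?)
log = [("A", True), ("A", True), ("A", True), ("A", False), ("B", False), ("B", False)]
for item, engaged in log:
    beliefs[item].update(engaged)

# Rank items by posterior mean engagement probability.
ranking = sorted(beliefs, key=lambda name: beliefs[name].mean, reverse=True)
print(ranking)
```

Item C, never shown, keeps its prior mean of 0.5, which is how this model expresses uncertainty about new items. Sampling from each Beta distribution instead of ranking by the mean (Thompson sampling) would also encourage exploring such items.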

3. Fraud Detection

Bayesian methods are widely used in fraud detection. By continuously updating the probability that a transaction is fraudulent based on transaction data and user behavior, these systems can identify and block suspicious activity more effectively and in real time.
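One simple Bayesian fraud scorer is a naive Bayes classifier, which applies Bayes’ Theorem under the assumption that features are conditionally independent given the class. The sketch below assumes categorical features and uses a small, invented set of labeled transactions; the functions `train` and `predict_proba` are hypothetical helpers:

```python
import math
from collections import Counter, defaultdict


def train(rows, labels):
    """Hypothetical helper: count class and per-class feature-value frequencies."""
    class_counts = Counter(labels)
    value_counts = defaultdict(Counter)  # (label, feature index) -> value counts
    seen_values = defaultdict(set)       # feature index -> distinct values seen
    for row, label in zip(rows, labels):
        for i, value in enumerate(row):
            value_counts[(label, i)][value] += 1
            seen_values[i].add(value)
    return class_counts, value_counts, seen_values


def predict_proba(model, row, smoothing=1.0):
    """Hypothetical helper: P(class | features) with Laplace-smoothed likelihoods."""
    class_counts, value_counts, seen_values = model
    total = sum(class_counts.values())
    log_scores = {}
    for label, count in class_counts.items():
        score = math.log(count / total)  # log prior
        for i, value in enumerate(row):
            k = len(seen_values[i])
            score += math.log((value_counts[(label, i)][value] + smoothing) / (count + smoothing * k))
        log_scores[label] = score
    # Normalize in log space for numerical stability.
    top = max(log_scores.values())
    weights = {label: math.exp(s - top) for label, s in log_scores.items()}
    norm = sum(weights.values())
    return {label: w / norm for label, w in weights.items()}


# Invented training data: (card country, time of day, amount band)
rows = [
    ("foreign", "night", "high"), ("foreign", "night", "high"), ("foreign", "day", "high"),
    ("domestic", "day", "low"), ("domestic", "day", "low"), ("domestic", "night", "low"),
    ("domestic", "day", "high"), ("foreign", "day", "low"),
]
labels = ["fraud"] * 3 + ["legit"] * 5
model = train(rows, labels)
print(predict_proba(model, ("foreign", "night", "high")))
```

Because the model consists only of counts, it can be updated incrementally as new labeled transactions arrive.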

4. Healthcare Predictions

In healthcare, Bayesian models help with diagnosing diseases and predicting patient outcomes. For instance, Bayesian networks can integrate patient data such as symptoms, medical history, and test results to produce probabilistic diagnoses and treatment recommendations, supporting more precise and personalized medical care.
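The reasoning a Bayesian network performs can be sketched with exact inference by enumeration on a tiny, entirely hypothetical network. All node names and probabilities below are invented for illustration and are not clinical values. Enumeration is exponential in the number of hidden variables, so real systems use more efficient algorithms:

```python
from itertools import product

# Hypothetical network: history -> disease -> {symptom, test}.
# Each node maps to (parents, CPT), where the CPT gives P(node = 1 | parent values).
NETWORK = {
    "history": ((), {(): 0.3}),
    "disease": (("history",), {(1,): 0.03, (0,): 0.005}),
    "symptom": (("disease",), {(1,): 0.8, (0,): 0.1}),
    "test": (("disease",), {(1,): 0.9, (0,): 0.05}),
}


def joint(assignment):
    """Joint probability of a full 0/1 assignment: product of each node's CPT entry."""
    prob = 1.0
    for node, (parents, cpt) in NETWORK.items():
        p_true = cpt[tuple(assignment[parent] for parent in parents)]
        prob *= p_true if assignment[node] == 1 else 1.0 - p_true
    return prob


def query(target, evidence):
    """P(target = 1 | evidence), summing the joint over all hidden variables."""
    hidden = [n for n in NETWORK if n != target and n not in evidence]
    totals = {0: 0.0, 1: 0.0}
    for value in (0, 1):
        for hidden_values in product((0, 1), repeat=len(hidden)):
            assignment = {**evidence, target: value, **dict(zip(hidden, hidden_values))}
            totals[value] += joint(assignment)
    return totals[1] / (totals[0] + totals[1])


print(query("disease", {"test": 1}))
print(query("disease", {"symptom": 1, "test": 1}))
```

Adding the symptom as evidence raises the probability of disease compared with the test result alone, and the unobserved history variable is summed out automatically.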

Recent Innovations and Trends

1. Bayesian Deep Learning

Recent work has combined Bayes’ Theorem with deep learning in Bayesian Neural Networks (BNNs), which place probability distributions over the network’s weights instead of learning single fixed values. As a result, they provide not only a predicted value but also a measure of confidence in it. This makes AI models more robust, particularly in complex and uncertain environments where knowing when a model is unsure is important.
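Full BNNs need approximate inference, but the core idea (a distribution over weights that yields both a prediction and an uncertainty) can be sketched in the limiting case of a single linear layer with a Gaussian prior on its weights, i.e. Bayesian linear regression, where the posterior is available in closed form. This is a simplified stand-in rather than a BNN training recipe; the synthetic data, the precisions `alpha` and `beta`, and the helper names are assumptions:

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: y = 1 + 2x + Gaussian noise, with x observed only in [-1, 1].
x = rng.uniform(-1.0, 1.0, size=50)
y = 1.0 + 2.0 * x + rng.normal(0.0, 0.1, size=50)

alpha = 1.0    # assumed prior precision of the weights: w ~ N(0, I / alpha)
beta = 100.0   # assumed (known) noise precision, i.e. noise std 0.1


def features(x):
    """Hypothetical helper: design matrix with a bias column (one linear layer)."""
    x = np.atleast_1d(x)
    return np.column_stack([np.ones_like(x), x])


# Closed-form Gaussian posterior over the weights: N(m_n, s_n).
phi = features(x)
s_n = np.linalg.inv(alpha * np.eye(2) + beta * phi.T @ phi)
m_n = beta * s_n @ phi.T @ y


def predict(x_new):
    """Predictive mean and variance (noise variance plus weight uncertainty)."""
    phi_new = features(x_new)
    mean = phi_new @ m_n
    var = 1.0 / beta + np.sum(phi_new @ s_n * phi_new, axis=1)
    return mean, var


for x_query in (0.0, 5.0):
    mean, var = predict(x_query)
    print(f"x={x_query}: mean={mean[0]:.3f}, std={np.sqrt(var[0]):.3f}")
```

The predictive standard deviation at x = 5, far outside the training range, is larger than at x = 0, which is the behavior that makes such models attractive in uncertain environments.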

2. Variational Inference

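Variational inference is a technique for approximating posterior distributions that are too complex to compute exactly. Instead of computing the posterior directly, it selects a simpler family of distributions and optimizes within that family to find the member closest to the true posterior, typically by maximizing a lower bound on the marginal likelihood (the ELBO). Turning inference into optimization makes Bayesian methods more scalable and practical for large datasets, which is crucial for applying them to big data problems.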
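A classic small example is coordinate-ascent variational inference (CAVI) for a Gaussian with unknown mean and precision under a Normal-Gamma prior, using the mean-field approximation q(mu) q(tau). The sketch follows the standard textbook update equations; the function name `cavi_normal`, the prior settings, and the synthetic data are assumptions. In this conjugate case the exact posterior is also available, so VI is used here only to show the mechanics:

```python
import random


def cavi_normal(x, mu0=0.0, lambda0=1.0, a0=1.0, b0=1.0, iterations=50):
    """Hypothetical helper: mean-field VI for x_i ~ N(mu, 1/tau) with a Normal-Gamma prior.

    Approximates p(mu, tau | x) by q(mu) q(tau), where
    q(mu) = N(mu_n, 1/lambda_n) and q(tau) = Gamma(a_n, b_n).
    """
    n = len(x)
    x_bar = sum(x) / n
    mu_n = (lambda0 * mu0 + n * x_bar) / (lambda0 + n)  # does not change across iterations
    a_n = a0 + (n + 1) / 2                               # nor does a_n
    e_tau = a0 / b0                                      # initial guess for E[tau]
    for _ in range(iterations):
        # Update q(mu) given the current expectation of tau.
        lambda_n = (lambda0 + n) * e_tau
        # Update q(tau) using expectations taken under q(mu).
        e_sq = sum((xi - mu_n) ** 2 for xi in x) + n / lambda_n
        e_prior = lambda0 * ((mu_n - mu0) ** 2 + 1 / lambda_n)
        b_n = b0 + 0.5 * (e_sq + e_prior)
        e_tau = a_n / b_n
    lambda_n = (lambda0 + n) * e_tau
    return mu_n, lambda_n, a_n, b_n


random.seed(0)
data = [random.gauss(2.0, 0.5) for _ in range(1000)]  # true mean 2, true precision 4
mu_n, lambda_n, a_n, b_n = cavi_normal(data)
print(f"E[mu] = {mu_n:.3f}, E[tau] = {a_n / b_n:.3f}")
```

Each update has a closed form here; for models without such conjugate structure, the same objective is usually optimized with stochastic gradients instead.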

3. Automated Machine Learning (AutoML)

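AutoML platforms are increasingly incorporating Bayesian optimization techniques. Bayesian optimization builds a probabilistic model of how performance depends on hyperparameters and model configurations, then uses that model to decide which configuration to evaluate next. This lets these platforms improve model performance automatically, with fewer costly training runs and without extensive manual intervention.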
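A compact sketch of Bayesian optimization, assuming a Gaussian-process surrogate with a squared-exponential kernel and the expected-improvement acquisition function (common choices, though AutoML platforms differ). It tunes a single hyperparameter whose "validation loss" is a made-up function; `validation_loss` and the other helpers are hypothetical:

```python
import math

import numpy as np


def rbf(a, b, length=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    return np.exp(-0.5 * (a[:, None] - b[None, :]) ** 2 / length ** 2)


def gp_posterior(x_obs, y_obs, x_new, jitter=1e-6):
    """GP posterior mean and std (zero prior mean, unit prior variance)."""
    k = rbf(x_obs, x_obs) + jitter * np.eye(len(x_obs))  # jitter for numerical stability
    k_s = rbf(x_obs, x_new)
    chol = np.linalg.cholesky(k)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_obs))
    mean = k_s.T @ alpha
    v = np.linalg.solve(chol, k_s)
    var = np.clip(1.0 - np.sum(v ** 2, axis=0), 1e-12, None)
    return mean, np.sqrt(var)


def expected_improvement(mean, std, best):
    """Expected improvement below the best value seen so far (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.array([math.erf(v / math.sqrt(2.0)) for v in z]))
    pdf = np.exp(-0.5 * z ** 2) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf


def validation_loss(h):
    """Hypothetical stand-in for training a model with hyperparameter h in [0, 1]."""
    return (h - 0.3) ** 2


candidates = np.linspace(0.0, 1.0, 201)
x_obs = np.array([0.0, 0.5, 1.0])
y_obs = np.array([validation_loss(h) for h in x_obs])

for _ in range(15):
    # Standardize observations so the zero-mean GP prior is reasonable.
    y_std = (y_obs - y_obs.mean()) / y_obs.std()
    mean, std = gp_posterior(x_obs, y_std, candidates)
    ei = expected_improvement(mean, std, y_std.min())
    next_h = candidates[np.argmax(ei)]  # evaluate where improvement is most expected
    x_obs = np.append(x_obs, next_h)
    y_obs = np.append(y_obs, validation_loss(next_h))

best_h = x_obs[np.argmin(y_obs)]
print(f"best hyperparameter ~ {best_h:.3f}, loss {y_obs.min():.5f}")
```

Expected improvement trades off exploring uncertain regions (large `std`) against exploiting regions the surrogate already predicts to be good (low `mean`).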

4. Explainable AI (XAI)

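Bayesian methods can also contribute to Explainable AI. Many Bayesian models, such as Bayesian networks and naive Bayes classifiers, are transparent: their priors, conditional probabilities, and the way each piece of evidence shifts a prediction can be inspected directly, unlike black-box approaches. Even when a Bayesian model is less interpretable, its uncertainty estimates indicate how much a prediction should be trusted. Both properties matter for applications that require high levels of trust and accountability.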
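For simple Bayesian models this transparency can be made concrete. In a naive Bayes model, the posterior log-odds are the sum of the prior log-odds and one log-likelihood ratio per observed feature, so each term can be reported as that feature’s contribution. The sketch assumes conditionally independent binary features; the helper `explain`, the feature names, and the probabilities are invented:

```python
import math


def explain(prior, likelihoods, observed):
    """Hypothetical helper: posterior P(positive | evidence) plus each term's log-odds share.

    likelihoods[feature] = (P(feature | positive), P(feature | negative)); features are
    assumed conditionally independent given the class (a naive Bayes assumption).
    """
    contributions = {"prior": math.log(prior / (1.0 - prior))}
    for feature in observed:
        p_pos, p_neg = likelihoods[feature]
        contributions[feature] = math.log(p_pos / p_neg)  # log-likelihood ratio
    log_odds = sum(contributions.values())
    return 1.0 / (1.0 + math.exp(-log_odds)), contributions


# Hypothetical likelihoods for a loan-default model.
likelihoods = {"late_payment": (0.6, 0.1), "long_tenure": (0.2, 0.5)}
p, parts = explain(0.1, likelihoods, ["late_payment", "long_tenure"])
print(f"P(default) = {p:.3f}")
for name, value in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {name:>12}: {value:+.3f}")
```

Positive contributions push toward the positive class and negative ones away from it, so the printout reads as a ranked explanation of the prediction.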

Challenges and Future Directions

Despite its advantages, applying Bayes’ Theorem in AI is not without challenges. Exact posterior computation is often intractable, so Bayesian models can be computationally expensive, especially with large datasets. Additionally, the quality and relevance of prior information are crucial, since poorly chosen priors can lead to inaccurate predictions, particularly when data are scarce.
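The effect of a poor prior is easy to see with a Beta-Binomial model. In the sketch below, where the helper name, data, and priors are hypothetical, a confident but wrong prior dominates ten observations, while a flat prior lets the data speak:

```python
def beta_binomial_posterior_mean(a, b, successes, trials):
    """Hypothetical helper: posterior mean of a success rate under a Beta(a, b) prior."""
    return (a + successes) / (a + b + trials)


successes, trials = 3, 10  # hypothetical data: observed rate 0.3
weak = beta_binomial_posterior_mean(1, 1, successes, trials)      # flat prior
strong = beta_binomial_posterior_mean(90, 10, successes, trials)  # confident prior centred on 0.9
print(f"weak prior:   {weak:.3f}")
print(f"strong prior: {strong:.3f}")
```

With more data the two posteriors converge toward the observed rate, which is why prior quality matters most when data are limited.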

Future research is likely to focus on improving the efficiency of Bayesian computations, integrating Bayesian methods with other AI techniques, and exploring new applications in emerging fields such as autonomous systems and advanced robotics.

Conclusion

Bayes’ Theorem is fundamentally reshaping predictive modeling in AI by enhancing accuracy, personalization, and robustness. Its ability to dynamically update predictions based on new data makes it an invaluable tool in various applications, from finance and healthcare to recommendation systems and fraud detection. As innovations continue to advance, Bayes’ Theorem will likely play an increasingly significant role in the development of intelligent and adaptive AI systems.