In today’s data-driven world, advanced analytics has become crucial for gaining actionable insights and driving strategic decisions. SAS (Statistical Analysis System) programming stands out as a powerful tool for performing complex data analysis and predictive modeling. This article explores how to effectively leverage SAS programming for advanced analytics, offering valuable tips and best practices to optimize your use of SAS.

Understanding SAS Programming

SAS is a comprehensive software suite used for data management, statistical analysis, and business intelligence, and SAS programming is how you drive it through code. It provides a robust platform for analyzing large datasets, generating reports, and performing intricate statistical computations. SAS is widely used across industries such as finance, healthcare, and marketing for its analytical capabilities.

Key Benefits of SAS Programming for Advanced Analytics

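- Comprehensive Data Handling: SAS can handle very large datasets because the DATA step reads and processes one observation at a time rather than loading the whole table into memory, allowing for detailed data manipulation and processing.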

- Advanced Statistical Procedures: It offers a wide range of statistical procedures and models for predictive analytics, regression analysis, and hypothesis testing.

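- Integration Capabilities: SAS integrates with other software and data sources, for example relational databases through SAS/ACCESS engines and delimited text files through PROC IMPORT, making it a versatile choice for analytics projects.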

- User-Friendly Interface: With both a programming interface and point-and-click options (such as the task menus in SAS Studio and SAS Enterprise Guide), SAS caters to both experienced programmers and beginners.

Tips for Leveraging SAS Programming

- Master the SAS Language: Understanding SAS programming language basics is crucial. Familiarize yourself with key components such as DATA steps, PROC steps, and macro variables. Mastering these elements will enable you to write efficient and effective SAS code.

- Utilize SAS Procedures: SAS provides numerous procedures (PROCs) for various statistical analyses. For advanced analytics, focus on procedures like PROC REG for regression analysis, PROC GLM for general linear models, and PROC ARIMA for time series forecasting. Leveraging these procedures can streamline your analysis process.

- Implement Data Cleaning Techniques: Before diving into analysis, ensure your data is clean and well-organized. Use SAS’s data manipulation capabilities to handle missing values, outliers, and inconsistencies. Procedures such as PROC SORT and PROC TRANSPOSE, together with DATA step operations, are essential for data preparation.

- Harness SAS Macros: SAS macros are powerful tools that can automate repetitive tasks and enhance code efficiency. By creating reusable macro programs, you can streamline your workflow and reduce the likelihood of errors. Macros are particularly useful for generating reports and running complex analyses with varying parameters.

- Optimize Performance: For large datasets and complex analyses, performance optimization is crucial. Utilize indexing, efficient data storage options (such as compressed SAS datasets that keep only the columns you need), and parallel processing features to improve execution times. Regularly review and optimize your SAS code to ensure it runs efficiently.
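
The first several tips (DATA steps, procedures, data cleaning, and macros) can be sketched together in one short program. This is a minimal illustration, not a recommended pipeline: it assumes the SASHELP.CLASS sample table that ships with SAS and a SAS/STAT license for PROC REG, and the names `work.class_clean`, `bmi`, and `fit_reg` are hypothetical.

```sas
/* Sketch only: SASHELP.CLASS is a small sample table shipped with SAS.  */
/* work.class_clean, bmi, and fit_reg are hypothetical names.            */

/* DATA step: drop rows with missing inputs and derive a new variable.   */
data work.class_clean;
    set sashelp.class;
    if missing(height) or missing(weight) then delete;
    /* Height is in inches and weight in pounds in this table. */
    bmi = (weight / (height ** 2)) * 703;
run;

/* PROC step: sort by name and drop rows with a duplicate key. */
proc sort data=work.class_clean nodupkey;
    by name;
run;

/* Macro: rerun the same regression with different parameters. */
%macro fit_reg(data=, y=, x=);
    title "Regression of &y on &x";
    proc reg data=&data;
        model &y = &x;
    run;
    quit;      /* PROC REG is interactive, so end it explicitly */
    title;     /* clear the title for later steps */
%mend fit_reg;

%fit_reg(data=work.class_clean, y=weight, x=height)
%fit_reg(data=work.class_clean, y=weight, x=height age)
```

Wrapping the procedure call in a macro keeps the model specification in one place, so a change to the options applies to every run.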

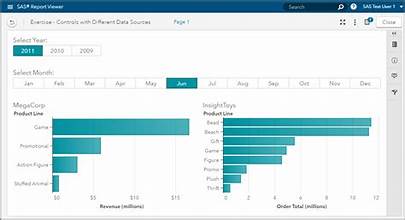

- Leverage SAS Visual Analytics: SAS Visual Analytics provides interactive dashboards and visualizations that can help in interpreting complex data. By integrating SAS programming with Visual Analytics, you can create insightful reports and share findings in a visually appealing manner.

- Stay Updated with SAS Resources: SAS continuously updates its software and releases new features. Stay informed about the latest developments by following SAS blogs, attending webinars, and participating in user communities. This will ensure you are using the most current tools and techniques available.
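
For the performance tip above, one possible sketch of indexing and leaner storage follows. It assumes the SASHELP.CARS sample table, and `work.cars_idx` is a hypothetical name. On a table this small, SAS may decide that compression or the index is not worth using, so treat it as a pattern to adapt to genuinely large data rather than a measured speedup.

```sas
/* Sketch only: work.cars_idx is a hypothetical copy of SASHELP.CARS. */

/* Read only the columns you need and request compressed storage. */
data work.cars_idx (compress=yes);
    set sashelp.cars (keep=make model type msrp horsepower);
run;

/* Create a simple index on MAKE. */
proc datasets library=work nolist;
    modify cars_idx;
    index create make;
quit;

/* MSGLEVEL=I writes a log message when an index is used for a WHERE clause. */
options msglevel=i;

proc means data=work.cars_idx mean max;
    where make = 'Toyota';
    var msrp horsepower;
run;

options msglevel=n;   /* restore the default message level */
```

Checking the log for the index message is a quick way to confirm whether the optimizer actually chose the index for a given query.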

Best Practices for Advanced Analytics with SAS

- Define Clear Objectives: Before starting any analysis, clearly define your objectives and the questions you want to answer. This focus will guide your use of SAS tools and ensure that your analysis is aligned with your goals.

- Document Your Work: Thorough documentation is essential for reproducibility and collaboration. Comment your SAS code, describe your data preparation steps, and document any assumptions or decisions made during the analysis process.

- Validate Your Results: Always validate your results by comparing them with expected outcomes or conducting sensitivity analyses. This step helps ensure the accuracy and reliability of your findings.

- Maintain Data Security: When working with sensitive or confidential data, adhere to best practices for data security. Use SAS’s built-in security features, such as dataset passwords and the ENCRYPT= dataset option, to protect your data and comply with relevant regulations.

- Seek Feedback and Collaboration: Engage with peers and experts to review your work and provide feedback. Collaboration can offer new perspectives and improve the quality of your analysis.
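
As an illustration of the documentation and validation practices above, the sketch below computes the same statistic two independent ways and compares the results with PROC COMPARE. It assumes the SASHELP.CLASS sample table. The file name in the header comment and the table names `work.means_a` and `work.means_b` are hypothetical.

```sas
/*---------------------------------------------------------------
  Program : validate_means.sas   (hypothetical file name)
  Purpose : Compute mean height by sex two ways and compare.
  Input   : SASHELP.CLASS (sample table shipped with SAS)
----------------------------------------------------------------*/

/* Method 1: PROC MEANS */
proc means data=sashelp.class noprint nway;
    class sex;
    var height;
    output out=work.means_a (drop=_type_ _freq_) mean=mean_height;
run;

/* Method 2: PROC SQL, as an independent calculation */
proc sql;
    create table work.means_b as
    select sex, mean(height) as mean_height
    from sashelp.class
    group by sex
    order by sex;
quit;

/* Compare the two results; CRITERION= sets the numeric tolerance. */
proc compare base=work.means_a compare=work.means_b
             criterion=1e-8 method=absolute;
    id sex;
run;

/* SYSINFO holds the PROC COMPARE return code; 0 means no differences. */
%put NOTE: PROC COMPARE return code = &sysinfo;
```

The header comment records purpose and inputs for later readers, and the return code gives an automated check that a scheduled job can test instead of relying on someone reading the listing.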

Conclusion

SAS programming is a powerful tool for advanced analytics, offering a wide range of functionality for data management, statistical analysis, and reporting. By mastering the SAS language, utilizing procedures, implementing data cleaning techniques, harnessing macros, optimizing performance, and using Visual Analytics, you can produce insightful and impactful analyses. Adhering to best practices such as defining clear objectives, documenting your work, validating results, maintaining data security, and seeking feedback will further enhance your analytical capabilities. Embrace SAS programming to unlock the full potential of your data and drive strategic decision-making.