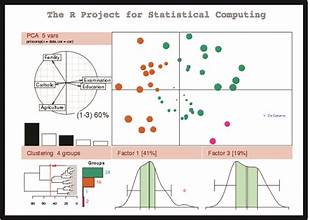

In the ever-evolving world of data science, R programming stands out as a robust tool for advanced statistical analysis. With its rich ecosystem of packages and powerful capabilities, R continues to be a top choice for statisticians, data analysts, and researchers. As we move into 2024, mastering R programming is crucial for anyone looking to excel in advanced statistical analysis. This guide explores the top techniques and tools in R programming that are set to dominate the field in 2024, helping you leverage the full potential of this versatile language.

The Evolution of R Programming

R programming has evolved significantly since its inception, driven by a vibrant community and continuous improvements. Originally designed for statistical computing, R has grown into a comprehensive language with capabilities spanning data manipulation, visualization, and complex statistical modeling. The advent of new packages and advancements in computational techniques has further enhanced its utility for advanced statistical analysis.

Key Techniques for Advanced Statistical Analysis in R

- Machine Learning and Predictive Modeling

Machine learning has become an integral part of advanced statistical analysis, and R provides a plethora of tools for this purpose. The `caret` package is a comprehensive tool for training and evaluating machine learning models. It simplifies model selection and tuning, allowing users to compare different algorithms under a common resampling scheme.

The `xgboost` package is another powerful tool, particularly for gradient boosting. It handles large datasets efficiently and captures complex interactions between variables. For neural networks, the `keras` and `tensorflow` packages provide R interfaces to the Keras and TensorFlow deep learning libraries, so models can be built and trained from within an R session.

- Bayesian Statistics

Bayesian methods have gained prominence due to their flexibility and their ability to incorporate prior knowledge into statistical models. The `rstan` package, an R interface to Stan, is invaluable for Bayesian analysis. It lets users specify complex models in the Stan language and performs inference through Markov chain Monte Carlo (MCMC) methods, by default the No-U-Turn Sampler (NUTS), a variant of Hamiltonian Monte Carlo.

Additionally, the `brms` package fits Bayesian models using a formula syntax similar to that of `lme4` and translates them into Stan code, making it accessible for users familiar with linear mixed-effects models.

- Time Series Analysis

Time series analysis remains a critical area in statistical analysis, particularly for forecasting and understanding temporal patterns. The `forecast` package offers a range of forecasting methods, including ARIMA and exponential smoothing (ETS) models; Prophet models are provided separately by the `prophet` package.

For a tidy workflow, the `tsibble` package provides a data structure for time series, and the `fable` package provides forecasting models that work on it, making temporal data easier to manipulate and model.

- High-Performance Computing

As datasets grow larger and analyses become more complex, high-performance computing becomes essential. The `data.table` package is optimized for speed and memory efficiency, making it a valuable tool for handling large datasets.

The `future` package enables parallel computing, allowing users to execute code across multiple cores or machines. This can significantly reduce computation time for resource-intensive tasks.

- Data Visualization

Effective data visualization is crucial for interpreting and communicating results. The `ggplot2` package remains the gold standard for creating complex and aesthetically pleasing plots. Its layered grammar of graphics supports a wide range of visualizations, from basic scatter plots to intricate multi-layered graphs.

For interactive visualizations, the `plotly` package can convert `ggplot2` graphs into interactive figures (via `ggplotly()`), making it easier to explore and present data dynamically.
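
A minimal `ggplot2` sketch of the layered approach described above, using the built-in `mtcars` data: a layer of points plus a layer with one linear fit per cylinder group. The variables and labels are illustrative choices, not taken from the article.

```r
library(ggplot2)

# Build the plot specification; each `+` adds a layer or a setting
p <- ggplot(mtcars, aes(x = wt, y = mpg, colour = factor(cyl))) +
  geom_point() +                                  # layer 1: the raw observations
  geom_smooth(method = "lm", formula = y ~ x,     # layer 2: one straight-line fit
              se = FALSE) +                       #          per cylinder group
  labs(x = "Weight (1000 lbs)", y = "Miles per gallon",
       colour = "Cylinders")

# Render the plot on the active graphics device
print(p)
```

Passing the same object to `plotly::ggplotly(p)` should yield an interactive version of the figure.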
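
Returning to the Machine Learning and Predictive Modeling item, the core of what `caret` automates is resampling-based model comparison. The sketch below hand-rolls 5-fold cross-validation in base R to compare two linear models on the built-in `mtcars` data. The two formulas and the RMSE metric are assumptions chosen for illustration, and this is not `caret`'s own implementation.

```r
set.seed(123)
k <- 5

# Randomly assign each of the 32 rows of mtcars to one of k folds
folds <- sample(rep(seq_len(k), length.out = nrow(mtcars)))

# Two candidate models to compare (illustrative choices)
formulas <- list(
  simple = mpg ~ wt,
  richer = mpg ~ wt + hp + qsec
)

# Cross-validated root-mean-squared error for one formula (response: mpg)
cv_rmse <- function(formula, data, folds) {
  errors <- numeric(0)
  for (fold in sort(unique(folds))) {
    train_set <- data[folds != fold, ]
    test_set  <- data[folds == fold, ]
    fit  <- lm(formula, data = train_set)        # fit on the other k - 1 folds
    pred <- predict(fit, newdata = test_set)     # predict the held-out fold
    errors <- c(errors, test_set$mpg - pred)
  }
  sqrt(mean(errors^2))
}

scores <- sapply(formulas, cv_rmse, data = mtcars, folds = folds)
scores  # lower RMSE suggests better out-of-sample accuracy
```

With `caret` itself, a comparable run would pass `trControl = trainControl(method = "cv", number = 5)` to a call such as `train(mpg ~ wt, data = mtcars, method = "lm")`, which reports resampled RMSE for each model.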
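
For the Bayesian Statistics item, the sketch below shows what MCMC does on a model small enough to check by hand: a Beta prior on a success probability, updated with binomial data (the counts and prior are hypothetical). It uses a random-walk Metropolis sampler written in base R, which is a simpler algorithm than the NUTS sampler Stan uses, and compares the sampled posterior mean with the exact conjugate answer, (a + y) / (a + b + n).

```r
set.seed(42)

# Hypothetical data and prior: y successes in n trials, theta ~ Beta(a, b)
y <- 7; n <- 10
a <- 2; b <- 2

# Exact answer from conjugacy: the posterior is Beta(a + y, b + n - y)
post_mean_exact <- (a + y) / (a + b + n)

# Log of the unnormalised posterior: log-likelihood + log-prior
log_post <- function(theta) {
  if (theta <= 0 || theta >= 1) return(-Inf)
  dbinom(y, n, theta, log = TRUE) + dbeta(theta, a, b, log = TRUE)
}

# Random-walk Metropolis: propose a nearby value and accept it with
# probability min(1, posterior ratio); otherwise keep the current value
metropolis <- function(n_iter, init = 0.5, step = 0.1) {
  draws <- numeric(n_iter)
  current <- init
  current_lp <- log_post(current)
  for (i in seq_len(n_iter)) {
    proposal <- current + rnorm(1, mean = 0, sd = step)
    proposal_lp <- log_post(proposal)
    if (log(runif(1)) < proposal_lp - current_lp) {
      current <- proposal
      current_lp <- proposal_lp
    }
    draws[i] <- current
  }
  draws
}

draws <- metropolis(20000)
kept <- draws[-seq_len(2000)]   # discard burn-in draws

c(mcmc = mean(kept), exact = post_mean_exact)
```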
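
For the Time Series Analysis item, here is a minimal ARIMA workflow using only the base `stats` package: the classic seasonal "airline" model, ARIMA(0,1,1)(0,1,1)[12], fitted to the log of the built-in `AirPassengers` series and forecast 12 months ahead. The model order is fixed by hand in this sketch; `forecast::auto.arima()` would instead search for an order automatically.

```r
# Log-transform to stabilise the growing seasonal swings (monthly, 1949-1960)
y <- log(AirPassengers)

# Seasonal ARIMA(0,1,1)(0,1,1)[12]: one regular and one seasonal difference,
# each with a single moving-average term
fit <- arima(y, order = c(0, 1, 1),
             seasonal = list(order = c(0, 1, 1), period = 12))

# 12-step-ahead forecasts on the log scale, with standard errors
fc <- predict(fit, n.ahead = 12)

# Back-transform to passenger counts; exp() of a log-scale point forecast
# gives a median, and +/- 1.96 SE gives approximate 95% intervals
forecast_table <- data.frame(
  point = exp(fc$pred),
  lower = exp(fc$pred - 1.96 * fc$se),
  upper = exp(fc$pred + 1.96 * fc$se)
)
round(forecast_table)
```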
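
For the High-Performance Computing item, the sketch below illustrates `data.table`'s `DT[i, j, by]` syntax on a simulated table: filter rows in `i`, compute summaries in `j`, group with `by`, and add a column by reference with `:=`, which modifies the table in place instead of copying it. The table and its column names are hypothetical.

```r
library(data.table)

set.seed(1)
n <- 1e5

# Hypothetical order-level data
sales <- data.table(
  region = sample(c("north", "south", "east", "west"), n, replace = TRUE),
  units  = rpois(n, lambda = 3),
  price  = round(runif(n, min = 1, max = 10), 2)
)

# DT[i, j, by]: keep rows with units > 0 (i), summarise (j), per region (by)
summary_dt <- sales[units > 0,
                    .(revenue = sum(units * price), orders = .N),
                    by = region]

# Sort by descending revenue, in place
setorder(summary_dt, -revenue)

# Add a column by reference: `:=` modifies `sales` without copying it
sales[, revenue := units * price]

summary_dt
```

The `future` package from the same item is complementary: its `plan()` function chooses how code is evaluated, for example `plan(multisession)` to run futures in background R sessions.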

Essential R Tools for 2024

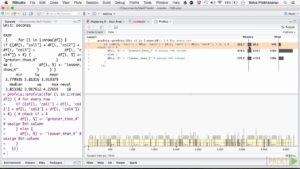

- RStudio

RStudio continues to be the premier integrated development environment (IDE) for R programming. Its user-friendly interface and robust features, such as script editors, debugging tools, and package management, make it an indispensable tool for R users.

RStudio also provides integrated support for R Markdown, which is crucial for creating dynamic reports that combine code, analysis, and visualizations in a single document.

- Shiny

Shiny is a powerful framework for building interactive web applications directly in R. It allows users to create dynamic dashboards and applications that can be shared with others. Shiny apps are particularly useful for visualizing and interacting with complex datasets, providing a user-friendly interface for exploring data.
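
A minimal Shiny sketch: a slider input controls the number of bins in a histogram of the built-in `faithful` data. The layout and the IDs `bins` and `hist` are illustrative choices. Here `shinyApp()` only builds the app object; `runApp(app)` (or printing `app` in an interactive session) launches it.

```r
library(shiny)

# UI: a title, a slider input, and a placeholder for the plot
ui <- fluidPage(
  titlePanel("Old Faithful waiting times"),
  sliderInput("bins", "Number of bins:", min = 5, max = 50, value = 20),
  plotOutput("hist")
)

# Server: re-draws the histogram whenever input$bins changes
server <- function(input, output, session) {
  output$hist <- renderPlot({
    hist(faithful$waiting, breaks = input$bins,
         main = NULL, xlab = "Waiting time (minutes)")
  })
}

app <- shinyApp(ui, server)
# runApp(app)  # opens the app in a browser and blocks the R session
```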

- R Markdown

R Markdown remains a key tool for reproducible research. It enables users to create documents that integrate R code with narrative text, producing reports, presentations, and interactive documents. A single source file can be rendered to several output formats, including HTML, PDF, and Word, making it easier to generate professional-quality documents.

- Bioconductor

For those working with genomic and bioinformatics data, the Bioconductor project provides a vast array of packages for analysis. Tools such as `DESeq2` for differential expression analysis and `GenomicRanges` for manipulating genomic intervals are essential for researchers in these fields.

- GitHub Integration

Integration with GitHub facilitates version control and collaboration on R projects. The `usethis` and `gh` packages streamline the process of creating and managing GitHub repositories from within R, making it easier to track changes and collaborate with others.

Best Practices for R Programming in 2024

- Code Efficiency

Writing efficient R code is crucial for handling large datasets and complex analyses. Using vectorized operations instead of element-by-element loops where possible can significantly improve performance. The `profvis` package profiles code to reveal where time is spent, helping users find bottlenecks and optimize their scripts.

- Documentation and Reproducibility

Documenting code and ensuring reproducibility are essential practices for effective data analysis. Using R Markdown for reports and including detailed comments in code helps maintain clarity and allows others to understand and reproduce results.
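
Alongside R Markdown and comments, two base-R habits help make results reproducible: fixing the random seed before any stochastic step, and recording the R and package versions an analysis ran with. A minimal sketch; the file name `session-info.txt` is an assumption, and it is written to a temporary directory here.

```r
# Fixing the random number generator state makes random draws repeatable
set.seed(2024)
first_run <- rnorm(5)

set.seed(2024)
second_run <- rnorm(5)

identical(first_run, second_run)  # TRUE: same seed, same draws

# Save the R version, platform, and attached packages next to the results
info_file <- file.path(tempdir(), "session-info.txt")
writeLines(capture.output(sessionInfo()), info_file)
```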

- Continuous Learning

The field of data science and R programming is constantly evolving. Staying updated with the latest packages, techniques, and best practices through online courses, webinars, and community forums is essential for maintaining proficiency and leveraging new advancements.
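
To illustrate the Code Efficiency item, the sketch below computes the same sum of squares with an explicit loop and with a vectorized expression, checks that both agree, and times them with `system.time()`. The data are simulated, and actual timings depend on the machine, so none are quoted here.

```r
set.seed(1)
x <- runif(1e6)

# Loop version: interpreted R code runs once per element
loop_square_sum <- function(v) {
  total <- 0
  for (value in v) total <- total + value^2
  total
}

# Vectorized version: the arithmetic runs in compiled code over the whole vector
vec_square_sum <- function(v) sum(v^2)

loop_time <- system.time(loop_result <- loop_square_sum(x))
vec_time  <- system.time(vec_result  <- vec_square_sum(x))

# Same answer up to floating-point tolerance
all.equal(loop_result, vec_result)

# Elapsed seconds for each approach
c(loop = loop_time[["elapsed"]], vectorized = vec_time[["elapsed"]])
```

For larger scripts, wrapping the code in `profvis::profvis({ ... })` produces an interactive profile showing which lines take the most time.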

Conclusion

Mastering R programming for advanced statistical analysis in 2024 involves leveraging a range of techniques and tools that enhance data manipulation, visualization, and modeling capabilities. By incorporating the latest advancements and best practices, data scientists and statisticians can perform sophisticated analyses and derive meaningful insights from complex datasets. Whether you are a seasoned professional or a newcomer to the field, staying abreast of these developments will ensure that you remain at the forefront of statistical analysis and data science.